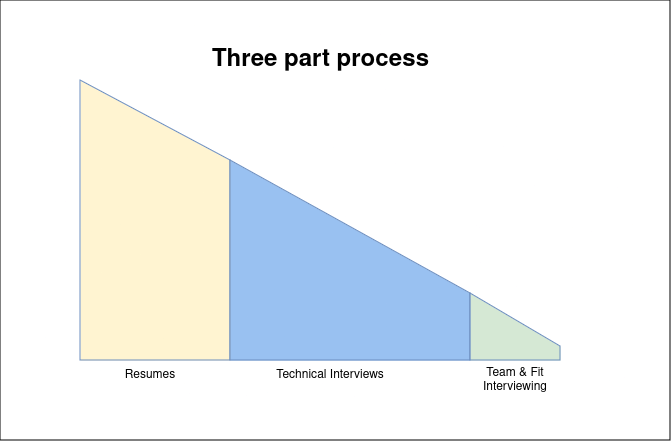

Bringing qualified candidates onto the team requires an investment of developer and leadership time.

When it comes to developers, we’re usually a lot more interested in their ability to write, debug, and improve applications than their ability to be unflappable in an interview.

There’s a lot to say about improving the hiring pipeline and providing team leads, HR and management with better tools.

Let’s look at how we bring on new talent and optimize the process.

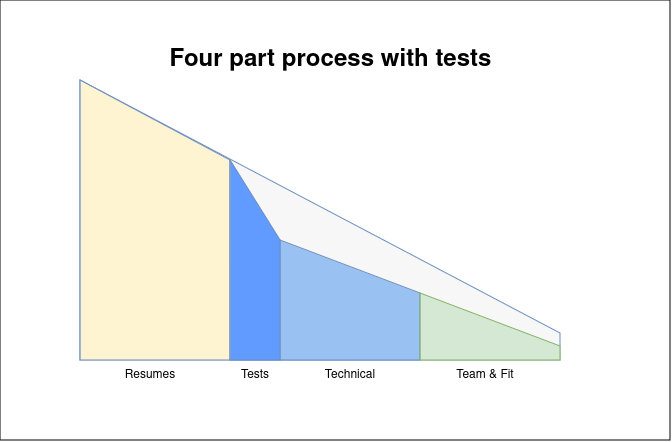

In the approach above, we don’t have a mechanism for technical filtering ahead of technical interviews. The process will struggle to scale.

Contents

- 1 Improving the curve through testing

- 2 Good quality Code Tests are a top line improvement to any pipeline

- 3 A Quick Review of Testing Process

- 4 Questions that are now trivial

- 5 Many ‘complex’ problems can also be solved trivially

- 6 Question types that Copilot struggles with

- 7 Expansive questions provide higher quality results

- 8 Debugging questions are a gold standard for testing questions

- 9 Incorporating AI tasks into the testing process

- 10 Producing a stronger gradient of results

- 11 Finally, improving the hiring pipeline by investing in test tooling

- 12 Not getting the results you’re looking for? Troubleshooting the process of code testing

Improving the curve through testing

Reducing the area under the curve allows us to scale our hiring practices.

A well-designed test should:

- reduce the number of unsuccessful interviews overall

- increase the breadth of resumes we can examine

- allow greater time to be spent on team and fit and deep dive interviews

- and increase the number of candidates suitable to hire and the end of a cycle

Good quality Code Tests are a top line improvement to any pipeline

They’re a great aid to introduce to any organization that has begun to significantly scale.

However, Copilot/ChatGPT and other ML models have the ability to compose reasonable functions from written descriptions, meaning outdated tests are trivially answerable without the candidate performing any action.

2025 brings us to the point of needing to update our testing process to retain the strengths of administering short tests and to ensure we’re picking up talent that can handle complex problems outside of the scope of a query to copilot.

For teams that haven’t run their code tests through Copilot, they may be surprised to learn how well it performs on many types of questions. Code generation is an active area of research, and specialized models are able to obtain satisfactory answers to written prompt questions as evaluated by a human on upwards of 80% of questions.

We’ll explore what makes some questions trival and what makes them challenging.

A Quick Review of Testing Process

Tests are suitable for all roles that involve technical skills. The content will vary and may not involve “code” for some positions.

Versions of the test can be produced for customer-facing developers, data analyst and data scientist roles, UI and UX positions. Generally, the overarching lessons here apply to all technical testing, not just development.

Good tests for candidates typically have a few qualities in common:

a) a quick test: no more than 1-2 hours in length

b) low burden: quickly understandable by hiring lead, reduce workload

c) valuable: the answers should tell us quite a bit about the candidate

Getting the right balance of question types helps improve the results we obtain from our pipeline.

Here’s a classic outline of the “Five Questions” test.

1) an easy question with a straightforward answer

– this is a fast-fail for folks who are obvious non-fits

– being a straightforward question, it reduces test anxiety and gives us better data on potential post-hire performance

– it shouldn’t take more than 5 minutes to answer question

2) a harder question that involves solving something with two distinct tasks

– this question should confound attempts to google/copilot an answer

– it should take 15-30 minutes to complete an answer

3) a question that involves debugging a snippet of code and fixing the errors

– debugging code or data is a critical skill, one that’s harder to evaluate with in-person interviews

– moving off hypothetical / clean room problems helps us explore capabilities

– the candidate being able to spot additional errors and side effects, even if they aren’t readily apparent, is a great to watch for

– it should take 25 minutes to 45 minutes to answer

4) offer commentary about the above code and how you would improve it / add to it

– being able to communicate with other developers about code is a key skill

– knowing what the candidate values is important and quite informative

– knowing what the person regards as ‘problems’ and how they address them tells us about personality fit

– should take not more than 15 minutes to answer

– questions 4 & 5 benefit from not having a reduced time pressure

5) a final question with an optional level of difficulty

– lets us spot candidates with a depth of skill

– should have many deep areas to explore in greater detail in a technical interview

– all of the remaining time (25 to 60 minutes, plus more as desired)

Having a refined design like the Five Questions Template above gives us something we can quickly scan candidates and filter out obvious non-fits on a performance basis rather than assumptive.

During any hiring cycle, we’d like a good return for developer/leadership/HR time spent and have a broad funnel for potential candidates. Excessive commitments of candidate time narrows this field, so a quick test outperforms a long test in the real world.

Tests that build our confidence in a candidate accelerate the in-person process; we can shorten the phase of the interview that establishes basic skill and re-commit that energy to fit and aptitude and a more finely tuned evaluation of particularly valuable skills.

Questions that are now trivial

Code tests have consistently evolved over the last thirty years in order to meet the reality of tools available.

Widespread internet access, quality google results and well-answered stackoverflow questions has rendered the idea of trivia-style questioning impractical.

We usually now test for resolving more complex scenarios, however, not all ‘complex’ scenarios are actually difficult for code generators.

Mostly Basic Python Problems is a benchmark, curated by Google Research, which consists of around 1,000 crowd-sourced Python programming problems, designed to be solvable by entry level programmers, and was released as part of the Program Synthesis with Large Language Models paper.

Austin et. al. (2021). Program Synthesis with Large Language Models.

https://arxiv.org/abs/2108.07732

Each problem in the dataset consists of a task description, code solution and 3 automated test cases.

Example Questions:

Question #638: Write a function to calculate wind chill index.

Question #699: Write a python function to find the minimum number of swaps required to convert one binary string to another.

The highest ranked LLM had an accuracy of 93.2% at time of writing.

Many ‘complex’ problems can also be solved trivially

Solvability is not limited to simple problems: if the language model can correctly decompose a question into reasonable steps, it can still rapidly complete even large problems with a correct response.

Let’s review some question styles where we’ve “increased” the difficulty, but which remain trivially solvable for commercial LLMs.

Question A)

“Write a function in javascript that takes as input a number N, randomly selects 5 animals, one of which is a bird, from a list of 15 animals, and returns up to a maximum of N array of arrays of different possible combinations of animal names in a random order.”

It’s difficult to obtain an answer to this just by using a search engine, because the combination of requirements is sufficiently unique that it does not produce matching questions.

Here’s what Microsoft Copilot, AI chatbot developed by Microsoft, based on the GPT-4, handled that question in 2024.

function getRandomCombinations(N) {

const animals = ["Lion", "Tiger", "Elephant", "Giraffe", "Zebra", "Kangaroo", "Panda", "Koala", "Penguin", "Eagle", "Parrot", "Owl", "Falcon", "Sparrow", "Peacock"];

const birdList = ["Penguin", "Eagle", "Parrot", "Owl", "Falcon", "Sparrow", "Peacock"];

function getRandomInt(min, max) {

return Math.floor(Math.random() * (max - min + 1)) + min;

}

function shuffleArray(array) {

for (let i = array.length - 1; i > 0; i--) {

const j = getRandomInt(0, i);

[array[i], array[j]] = [array[j], array[i]];

}

}

function getRandomAnimals() {

const selectedAnimals = [];

const bird = birdList[getRandomInt(0, birdList.length - 1)];

selectedAnimals.push(bird);

const remainingAnimals = animals.filter(animal => animal !== bird);

shuffleArray(remainingAnimals);

for (let i = 0; i < 4; i++) {

selectedAnimals.push(remainingAnimals[i]);

}

shuffleArray(selectedAnimals);

return selectedAnimals;

}

const combinations = [];

for (let i = 0; i < N; i++) {

combinations.push(getRandomAnimals());

}

return combinations;

}

// Example usage:

console.log(getRandomCombinations(3));Copilot’s answer is correct and insufficiently distinguishable from work done by humans.

The inclusion of undefined components did not pose issues.

Essentially, models that can handle composition of arbitrary functions can answer many questions, especially where there is a strong similarity to well-published questions.

Questions that resemble textbook questions or homework questions asked on stackoverflow are particularly trivial, even if their combination is unique with distinct elements.

Unfortunately, these questions are quite common on code tests for candidates.

Because answers to this appear human-written and are reasonably high quality, we’re not going to get far unless we change our approach to what we test and why we test it.

Question types that Copilot struggles with

We can produce questions the AI will struggle to answer.

Question B)

“Take this snippet and make the cursor appear as a hand when it is over the circle and not the square.”

<br>

<hr>

<div id="box">

<div class="square">

1

</div>

<div class="circle">

2

</div>

</div>

<STYLE>

#box .square {

border-radius: 16px;

width: 32px; height: 32px;

background-color: limegreen;

position: absolute;

top: 15px;

left: 15px;

cursor: pointer;

}

#box .circle {

width: 32px; height: 32px;

background-color: pink;

position: absolute;

}

#box {

position: absolute;

}

</STYLE>Copilot will answer that it should move border-radius from element 2 to element 1, not understanding that element 1 will appear as a circle to the user and the class names have no bearing on the element.

JSfiddle: https://jsfiddle.net/ydkmja4z/

This inability to understand which element is a circle is a limitation of Copilot, not ambiguity. As we’ve seen before, Copilot can handle ambiguity quite well.

Further questions demonstrate Copilot’s inability to reduce the question to a solvable problem.

Helpfully, in the answer above, Copilot identifies the rubric it uses to determine if something appears circular, which is to examine the css for (equal width and height with a border-radius set to 50%).

In general, LLM models have struggled with consequential outcomes ( https://arxiv.org/abs/1905.07830 ) even when trained on those specific outcomes. And while progress has been made, in general, visual outcomes from interactions, event model and flow across a complex events, debugging and cross-domain logic are weak points.

Expansive questions provide higher quality results

In general, access to LLMs exacerbate a weakness present in many testing scenarios, which is questioning of candidates tests only a surface knowledge of a topic.

The characteristic of being answerable through repetition rather than understanding or effort is also what makes a question trivial to copilot. Refining our questions to a higher quality satisfies both the need for better questions and produces challenging results for LLMs.

In the next question, we’ll explore a question that not only confounds Copilot but also produces a greater insight into the experience and competence of the candidate.

Question C)

“There is an error in this httpd configuration that will arise under common circumstances if the container and services is restarted but the connection to the internet is delayed.

What is that error, why does it occur, and how would you resolve it?”

NameVirtualHost 192.168.1.200:80

<VirtualHost mytestsite.tld:80>

ServerAdmin general@mytestsite.tld

DocumentRoot /var/www/vhosts/mytestsite.tld/htdocs

ServerName mytestsite.tld

ServerAlias www.mytestsite.tld

ErrorLog logs/mytestsite.tld-error_log

CustomLog logs/mytestsite.tld-access_log common

DirectoryIndex index.html index.htm welcome.html

</VirtualHost>

<VirtualHost testsite.tld:80>

ServerAdmin general@testsite.tld

DocumentRoot /var/www/vhosts/testsite.tld/htdocs

ServerName testsite.tld

ServerAlias www.testsite.tld

ErrorLog logs/testsite.tld-error_log

CustomLog logs/testsite.tld-access_log common

DirectoryIndex index.html index.htm welcome.html

</VirtualHost>

How would a candidate go about answering this question?

To solve it, if they cannot identify the issue from experience, the candidate can perform a few tests starting and stopping a HTTPD server under certain circumstances. This is an appropriate question for positions that include dev-ops or system operation tasks, but anyone with experience with any form of containerization (docker, cloud technologies, virtualization) or other backend technologies have the skill to test the conditions, draw a reasonable inference about the error, test a solution and offer an explanation in a reasonable timeframe.

Introducing a requirement to a question to learn, project a conclusion, and test, especially repeatedly, in order to solve a problem, provides immediate challenges to LLM-based answers.

Outside of answers enhanced through Retrieval-augmented generation, LLM systems like copilot cannot be said to learn during an interaction. Such systems can be prompted to try new prompts to retrieve/resolve a correct answer or a prompt can be refined to further approach a correct answer, but will struggle if the corpus used for training contains errors.

Similarly, the system will produce unsatisfactory answers when faced with a problem where the understanding of it must change as it is explored, especially without the means to perceive change.

Regardless of how the candidate solves the problem, the answer the candidate gives about other approaches allows us to explore with them the advantages and drawbacks.

This style of question is a great one for asking candidates to propose more solutions, including examples of how they would implement those solutions, including code or configuration.

The questions that can offer a launching point for topics during an in person interview are interesting.

Debugging questions are a gold standard for testing questions

Complex tasks, like genuinely debugging a hidden issue, can fall outside of copilot’s scope by a considerable margin.

This is a debugging style question. This kind of bug is invisible to AI-assist applications like copilot.

Question D)

Here is a contrived example one page calendar in plain, standards-compliant HTML and javascript.

There are a set of bugs in this application that affect what is being rendered in these two subsequent months – one in the generation of the sample data and one in the display of the calendar months.

Without significantly rewriting the application, correct the specific bugs, and explain what caused them in the first place.

<!DOCTYPE html>

<html lang="en">

<head>

<meta charset="UTF-8">

<meta name="viewport" content="width=device-width, initial-scale=1.0">

<style>

body { height: 100vh; background-color: #f0f0f0; padding: 20px; box-sizing: border-box; }

#calendar { display: block; position: static; }

.calendar-container { display: flex; flex-direction: column; justify-content: center; align-items: center; width: 100%; max-width: 1200px; margin: 0 auto; }

.calendar { width: 100%; }

.month { display: grid; grid-template-columns: repeat(7, 1fr); gap: 1px; border: 2px solid #000; margin-bottom: 20px; }

.month-header { grid-column: span 7; text-align: center; font-weight: bold; padding: 10px; background-color: #ddd; }

.day-header { font-weight: bold; text-align: center; padding: 10px; background-color: #eee; }

.day { border: 1px solid #ddd; background-color: #fff; box-sizing: border-box; padding: 8px; min-height: 100px; display: flex; flex-direction: column; }

.date-header { font-weight: bold; margin-bottom: 8px; }

.staff { margin-bottom: 4px; }

</style>

<title>Calendar for Karaoke Night - This month and next month</title>

</head>

<body>

<section id="calendar">

<h3>Karaoke Hosts - this month and next month</h3>

<div class="calendar-container">

<div class="calendar" id="calendar"></div>

</div>

</section>

<script>

// Generate a UUID (one-liner function)

const generateUUID = () => 'xxxxxxxx-xxxx-4xxx-yxxx-xxxxxxxxxxxx'.replace(/[xy]/g, c => (c === 'x' ? (Math.random() * 16 | 0) : ((Math.random() * 16 | 0) & 0x3 | 0x8)).toString(16));

// generates an example of a JSON staffBookings structure

function loadStaffBookings() {

// Example structure created by loadStaffBookings:

// {

// "startDate": {

// "bookingID": { "staffName": "Alice", "period": "afternoon" },

// "bookingID": { "staffName": "Bob", "period": "evening" }

// },

// "startDate": { ... }

// }

const staffNames =

Array.from({ length: 10 }, () => {

const uuid = generateUUID();

const names = ['Alice', 'Bob', 'Charlie', 'Diana', 'Eve', 'Frank', 'Grace', 'Heidi', 'Ivan', 'Judy'];

return { [uuid]: { staffName: names.shift() } };

}).reduce((acc, curr) => ({ ...acc, ...curr }), {});

const bookings = {};

const today = new Date();

for (let i = -30; i <= 60; i++) {

const date = new Date();

date.setDate(today.getDate() + i);

const dateString = date.toISOString().split('T')[0];

bookings[dateString] = {};

const periods = ['afternoon', 'evening'];

const filledPeriods = Math.random() < 0.9 ? periods : [periods[Math.floor(Math.random() * periods.length)]];

filledPeriods.forEach(period => {

const bookingID = generateUUID();

const staffID = Object.keys(staffNames)[Math.floor(Math.random() * Object.keys(staffNames).length)];

bookings[dateString][bookingID] = { staffID, period, staffName: staffNames[staffID].staffName };

});

}

window.application = window.application || {};

window.application.staffBookings = bookings;

}

// Generate calendar with staff bookings

function generateCalendar(startDate, numMonths, staffBookings) {

const calendarContainer = document.querySelector('.calendar-container .calendar');

const daysOfWeek = ['Monday', 'Tuesday', 'Wednesday', 'Thursday', 'Friday', 'Saturday', 'Sunday'];

const benchmarks = [];

let currentDate = new Date(startDate);

for (let i = 0; i < numMonths; i++) {

const monthContainer = document.createElement('div');

monthContainer.className = 'month';

const monthHeader = document.createElement('div');

monthHeader.className = 'month-header';

monthHeader.textContent = `${currentDate.toLocaleString('default', { month: 'long' })} ${currentDate.getFullYear()}`;

monthContainer.appendChild(monthHeader);

// Add day of the week headers

daysOfWeek.forEach(day => {

const dayHeader = document.createElement('div');

dayHeader.className = 'day-header';

dayHeader.textContent = day;

monthContainer.appendChild(dayHeader);

});

const monthStart = new Date(currentDate.getFullYear(), currentDate.getMonth(), 1);

const monthEnd = new Date(currentDate.getFullYear(), currentDate.getMonth() + 1, 0);

let startDay = monthStart.getDay();

startDay = startDay === 0 ? 6 : startDay - 1; // Adjust to have Monday as the first day

for (let blank = 0; blank < startDay; blank++) {

const blankDiv = document.createElement('div');

blankDiv.className = 'day';

monthContainer.appendChild(blankDiv);

}

for (let day = 1; day <= monthEnd.getDate(); day++) {

const dayDiv = document.createElement('div');

dayDiv.className = 'day';

const dateHeader = document.createElement('div');

dateHeader.className = 'date-header';

dateHeader.textContent = `${day}`;

dayDiv.appendChild(dateHeader);

const dateString = new Date(currentDate.getFullYear(), currentDate.getMonth(), day).toISOString().split('T')[0];

if (staffBookings[dateString]) {

Object.values(staffBookings[dateString]).forEach(booking => {

const staffDiv = document.createElement('div');

staffDiv.className = 'staff';

staffDiv.textContent = `${booking.staffName} (${booking.period})`;

dayDiv.appendChild(staffDiv);

});

}

monthContainer.appendChild(dayDiv);

currentDate.setDate(currentDate.getDate() + 1);

}

calendarContainer.appendChild(monthContainer);

currentDate.setMonth(currentDate.getMonth() + 1); // Move to the next month

currentDate.setDate(1); // Reset to the first day of the month

}

}

document.addEventListener('DOMContentLoaded', () => {

loadStaffBookings();

setTimeout(() => {

const today = new Date();

generateCalendar(today, 2, window.application.staffBookings); // Generate calendar using staff bookings

}, 200);

});

</script>

</body>

</html>

To solve this question, the candidate has to refactor the generation of the staffBookings sample data (instead of a random series of names, Alice is the only name that appears) and fix the fact that the display function skips forward a whole month (instead of calendars for June & July, it would generate calendars for June & August).

Most likely, to spot the errors, the candidate will need to employ breakpoints and step through the code, because the error only emerges mid-loop.

The task is straightforward to debug, but like most debugging efforts, it might take the candidate a little while as they try different lines of inquiry.

Remember, it’s best to test all questions before adding them to make sure it’s answerable in a reasonable timeframe by those who don’t know the answer.

Modifying the question for other languages or frameworks

We can explore or reframe this style of questions in many different ways, frameworks and styles.

We’re still looking for something contrived to keep the user’s setup time down.

A real application might swap DateTime for luxon, the string interpolation may be handled by a library, and of course we wouldn’t be generating a sample JSON structure to work with.

(Some very quick examples in Angular and Svelte in the spoiler tags below)

While we can introduce framework specific issues to compound the complexity of the problem, we have a goal to constrain realistic time spent to less than forty minutes on this question.

Good questions are about fundamental debugging skills, and expressible in many ways.

Using naive and un-optimized code within our questions provide excellent launching points for deeper conversations and candidate insight.

Incorporating AI tasks into the testing process

For organizations that include the use of AI tools to generate code for their projects as a core function of how they operate, it can be useful to directly test a candidate’s ability to operate with AI generated code.

Here’s a solvable task that will cause some LLM models to loop through a series of bad results, requiring human intervention.

Question D (1st alternative):

Suppose you have a snippet of HTML that is defined by an application not operated by your organization. You have permission to only adjust the contents of style=”” attribute.

Adjust the given prompt so that Copilot will reliably produce a correct answer, even if unprompted with other data on a fresh session. Copilot must not introduce new tags of any kind and correctly return a properly styled table that meets the defined requirements of the original prompt.

Optionally, ensure the prompt also produces a visually pleasing set of style attributes in screen sizes above and below 800px;

<!--

PROMPT:

Cause this table with inline styles to hide the center column and place the two cells stacked vertically at screen sizes smaller than 760 pixels wide. No new tags of any kind can be introduced and existing tags cannot be renamed.

-->

<div style="width: 800px;">

<table style="border-collapse: collapse; width: 100%;"> <tbody> <tr>

<td style="width: 50;">An arbitrarily long series of words about text</td>

<td style=""> </td>

<td style="width: 50%;">And other criteria that can exist without complication.</td>

</tr> </tbody> </table>

</div>

Each task should have some complicating factor that frustrates an LLM’s naive approach and requires the user to critically think about what they are designing and trying to do when they write their prompt.

In the above example, that wrinkle is the restriction on new tags. It’s a quick solution (an experienced front-end developer has many tools to resolve this without javascript or media queries).

This type of exercise can be expanded to other tasks, such as “adjust this prompt so that, given an arbitrary database definition (sample included below), copilot will generate a class with a complete set of filter methods for every field that will only allow permitted values.”

In the database example, the wrinkle we could introduce is a JSON field that is described in a way that the LLM does not understand.

A lack of expertise here will become especially informative when the prompt is, subtly, asking for the wrong thing and the user does not know how to describe what is they are trying to achieve.

By asking a question in this style from a domain outside of a user’s expertise, the question will also give us an assessment of the candidate’s ability not just to use LLM models, but to learn on their own and critically evaluate their output.

Producing a stronger gradient of results

Sharp-eyed readers will have noticed Question D (the calendar question prior to the AI Prompt writing question) has more shortcomings than just the listed bugs.

When developing the final advanced questions (Question E), remember to seed them with other issues that will not appear on a cursory inspection or test run.

Question E)

Once you have fixed the identified the bugs, identify any remaining bugs or edge cases, and offer some thoughts about the coding techniques and styles that made the original bug challenging to spot and whether there are other changes you would make to improve the legibility of the code.

With that – an advanced question with a deep dive component – we have a strong coding test that is quick to filter out obvious non-fits, with a very deep gradient for differentiating skill.

Finally, improving the hiring pipeline by investing in test tooling

A quality test with great questions greatly enhances the process. We can also invest time in tooling.

What we’re really hiring for is the ability to do work and solve complex issues, so tools that allow us to perform deeper assessments are worth examining.

Improving our tooling around testing candidates will make

Playpen and jsFiddle: At the most basic level, we can supply candidates with tests using services like jsFiddle and other playpen examples. These take not much more time to set up, and if set to private, are reasonably secure.

Screen Shares: when we have capacity, we can do developer screen shares. Since the end of the pandemic, it seems less necessary. We usually find administering the short test and then spending the time in person talking with a real IDE open to be more productive than adding a screen share session back in.

Containerized Test Environment: At a higher level, we can supply a candidate with a rough approximate of what the workplace development environment looks like in a container, complete with buildscripts, and provide them with tasks within that environment. Credentials and instructions are easy to set up and reset.

Of course, it’s important that the account we test under have Full Relationship Isolation for security purposes, but once that is accomplished, we gain the following things:

- Testing against very large data sets (100 gb+) which are impractical by email

- Testing candidate ability to navigate build environments

- Broad-spectrum questions that touch on multiple systems (from backend to front-end)

- Lower work burden to create “depth” questions from whole cloth (questions 3 and 5)

- A very real sense how quickly the developer could pick up technology and paradigms at the workplace, and, from question 4, their opinions about whether they would like working with them

Additionally, because the developer does not have to perform routine tasks like setting up an environment or downloading components, we can spend more of the test time deep diving into hard questions.

Containerized environments are beneficial for both developers and non-developers

The uncapped data component is useful for any positions that test developer understanding of scalability and performance, and profoundly useful for testing data analyst and engineer skills.

Questions like “this environment performs in aberrant fashion. Identify the issue from the logs and resolve it,” become possible in containerized settings.

A ready-made containerized application is also exceptional for front-end developers.

We’re a bit less interested in “explain border box to me in this constructed example” and a lot more interested in “there are visual defects in this page. Identify and correct them,” or “this page renders poorly and slowly on mobile devices. Explore the animation techniques and optimize them.”

Since the advent of Copilot, we’ve cared less about knowing a specific framework or technology, as it accelerates learning on unfamiliar formats. However, being able to present someone with a simple task in a legacy framework (YUI or, say, ZK) and seeing how they handle it can be a useful data point.

Techniques & Terms

Not getting the results you’re looking for? Troubleshooting the process of code testing

For those who already have a code test, or have tried to implement one and are running into issues in the process, here’s a quick troubleshooting Q&A derived from some experiences with different outcomes.

END